AI Generated Content Regulation in India | 25 Oct 2025

This editorial is based on “Synthetic media: On labelling of AI-generated content”, which was published in The Hindu on 24/10/2025. The article discusses India’s proposed amendments to the IT Rules, 2021, which mandate clear labelling of AI-generated or synthetic content across social media platforms to curb deepfakes, enhance transparency, and protect public trust in digital information.

For Prelims: Deepfakes, Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, Global Cybersecurity Outlook 2025, Information Technology Act, 2000 (IT Act), Digital Personal Data Protection Act, 2023 (DPDP Act), Bharatiya Nyaya Sanhita, 2023 (BNS), Indian Cyber Crime Coordination Centre (I4C), CERT-In.

For Mains: Key Features of the Proposed IT Rules Amendment for AI-generated Content Regulation in India, Key Factors Contributing to the Rising Threat of Deepfakes and Online Misinformation in India, India’s Legal and Institutional Framework to Tackle Deepfakes and Cyber Crimes

In the digital age, the rise of AI-generated content has transformed how information is created and consumed, bringing unparalleled innovation alongside serious challenges. India's proposed mandatory labelling of synthetic media reflects an urgent response to the escalating risks of deepfakes and misinformation that threaten public trust and democratic processes. With concerns about misuse, accountability, and transparency at the forefront, this policy aims to empower users to discern authentic content while balancing innovation with regulation in a rapidly evolving technological landscape.

What Are the Key Features of the Proposed IT Rules Amendment for AI-generated Content Regulation in India?

- Mandatory Labelling of AI-Generated Content: The Ministry of Electronics and Information Technology (MeitY) has proposed amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 to address the misuse of synthetically generated content, including deepfakes.

- The new rules require mandatory labelling of all content that is artificially or algorithmically created, generated, modified, or altered by AI tools in a way that appears authentic or real.

- Labelling Requirements:

- For visual content, labels must cover at least 10% of the total display area.

- For audio content, labels must be audible during at least 10% of the total duration.

- A permanent metadata identifier or watermark must be embedded to ensure traceability and prevent tampering.

- User Declaration: Platforms must obtain a declaration from users at the time of uploading whether their content has been created or modified using AI or any synthetic process.

- Technical Verification: Platforms must deploy “reasonable and proportionate technical measures”, such as automated detection tools, to verify the accuracy of user declarations.

- Shared Responsibility: Both creators and hosting platforms (such as YouTube, Instagram, and X) are equally responsible for ensuring proper labelling and may lose safe harbour immunity if they fail to comply.

- Scope of Coverage: The definition of “synthetically generated information” covers text, images, video, and audio, expanding beyond traditional deepfakes to include all computer-generated modifications.

- Implementation and Feedback: The draft was opened for public and industry feedback until November 6, 2025, after which it will be finalised.
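The 10% thresholds in the labelling requirements above reduce to simple arithmetic. The following is a minimal sketch of how a platform might check them, assuming a rectangular on-screen label and a single continuous audio disclosure; the names `VisualLabel`, `min_banner_height`, and `min_audio_label_seconds` are hypothetical and are not taken from the draft rules.

```python
from dataclasses import dataclass

# Thresholds as described in the draft amendment (expressed in percent).
MIN_VISUAL_PERCENT = 10  # label must cover at least 10% of the display area
MIN_AUDIO_PERCENT = 10   # label must be audible for at least 10% of the duration


@dataclass
class VisualLabel:
    """Hypothetical model: a rectangular label drawn on a rectangular frame."""
    frame_width: int
    frame_height: int
    label_width: int
    label_height: int

    def is_compliant(self) -> bool:
        # Integer arithmetic avoids floating-point rounding at the boundary.
        label_area = self.label_width * self.label_height
        frame_area = self.frame_width * self.frame_height
        return label_area * 100 >= frame_area * MIN_VISUAL_PERCENT


def min_banner_height(frame_height: int) -> int:
    """Smallest height (in pixels) of a full-width banner meeting the threshold."""
    # Ceiling division: round up so the banner never falls below 10%.
    return -(-frame_height * MIN_VISUAL_PERCENT // 100)


def min_audio_label_seconds(duration_seconds: float) -> float:
    """Minimum time the audio disclosure must be audible."""
    return duration_seconds * MIN_AUDIO_PERCENT / 100


if __name__ == "__main__":
    banner = VisualLabel(1920, 1080, 1920, min_banner_height(1080))
    print(banner.is_compliant())
    print(min_audio_label_seconds(60))
```

Under these assumptions, a full-width banner on a 1920×1080 video needs to be at least 108 pixels tall, and a 60-second audio clip needs at least 6 seconds of audible disclosure.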

What are the Key Factors Contributing to the Rising Threat of Deepfakes and Online Misinformation in India?

- Proliferation of Advanced AI Software and Deep Learning Techniques: The availability of open-source deepfake creation tools like DeepFaceLab, along with widely accessible generative AI services such as ChatGPT, has made it easier for malicious actors to produce convincing synthetic content, including hyper-realistic videos and audio.

- For instance, in 2024, over 8 million deepfake files were detected globally, a 16-fold increase from 2020, with India witnessing a significant surge.

- Deepfake cases in India have surged by 550% since 2019, with losses projected at ₹70,000 crore in 2024 alone, underlining the growing economic and security threat posed by synthetic media.

- These tools enable easy creation of politically motivated fake videos, such as doctored speeches of leaders, complicating verification efforts.

- Escalating Financial Frauds and Cybercrimes: Deepfake technology is increasingly used for financial scams, voice phishing, and identity theft.

- Globally, deepfake fraud attempts rose roughly 31-fold in 2023, an increase of about 3,000% year on year.

- An Indian case in July 2023 involved a 73-year-old man fooled by a deepfake video of his former colleague, leading to a ₹40,000 scam (about USD 500).

- Manipulation of Electoral Processes and Political Misinformation: Deepfakes are exploited to influence elections by spreading false speeches, forged interviews, and doctored video endorsements.

- During India’s 2024 general elections, fake videos of politicians were disseminated across social media platforms, eroding public trust and posing risks to democratic integrity.

- Such misinformation campaigns are often designed to polarise voters or discredit opponents.

- Platforms like WhatsApp, Telegram, and TikTok facilitate quick dissemination of deepfakes, often before media outlets or authorities can verify content.

- Inadequate Legal and Regulatory Frameworks: Although India relies on existing laws such as the IT Act, 2000 and the Digital Personal Data Protection Act, 2023 to address deepfakes, there is no comprehensive law specifically targeting deepfake misuse.

- For example, Indian courts have recognised the severity of deepfake misuse but have yet to establish definitive legal deterrents.

- Legislative gaps allow offenders to operate with relative impunity, emphasising the need for specialised regulation.

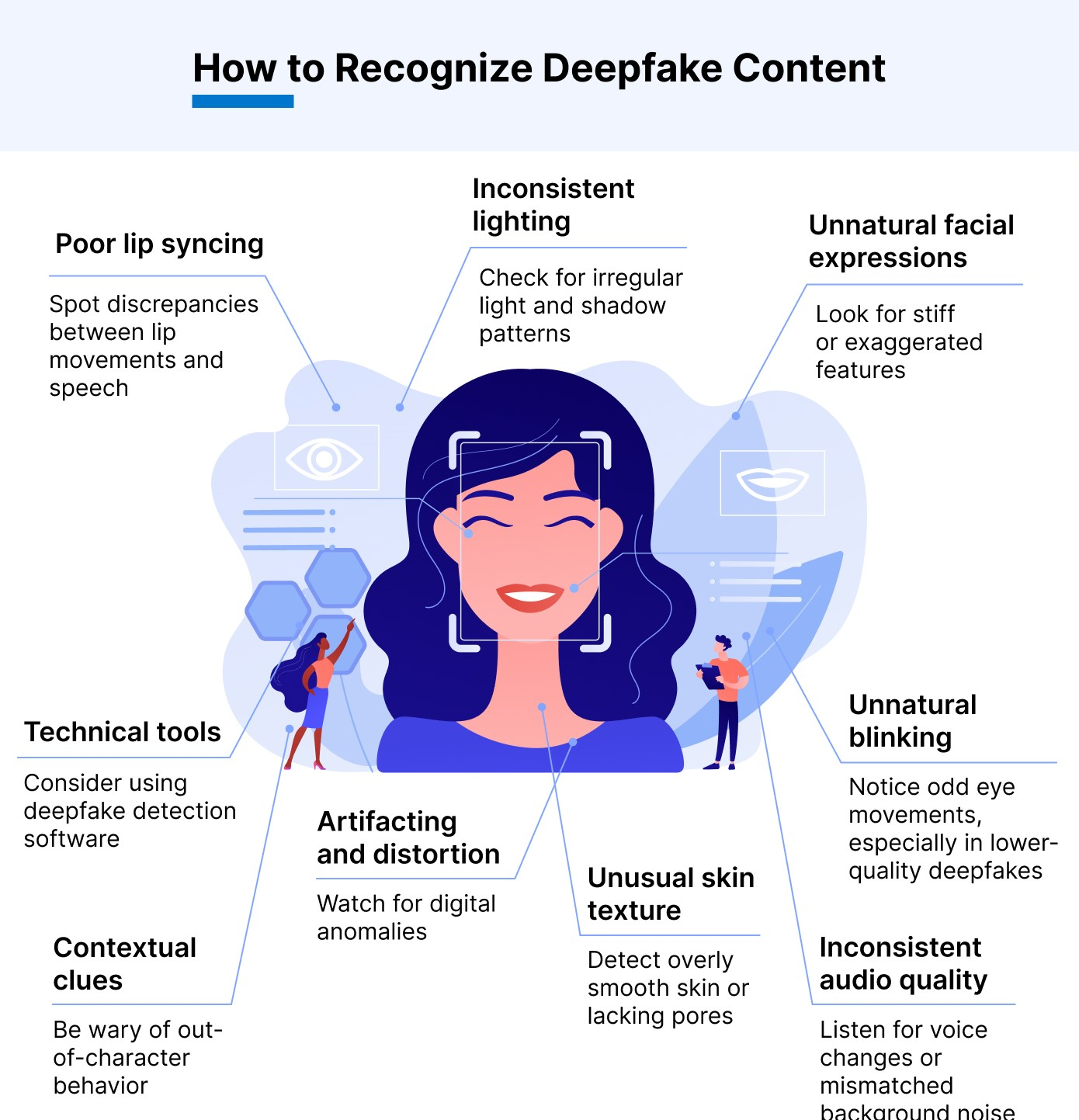

- Lack of Awareness and Limited Digital Literacy: Despite rising prevalence, the general public remains largely unaware of deepfake threats.

- According to a 2023 McAfee survey, 47% of Indians have either been victims of deepfake scams or know someone who has, one of the highest percentages worldwide.

- According to McAfee, 70% of people aren't confident they can distinguish between a real and AI-cloned voice.

- This lack of awareness makes individuals more susceptible to falling prey to misinformation.

- As the World Economic Forum's Global Cybersecurity Outlook 2025 emphasizes, the deepfake threat represents a critical test of our ability to maintain trust in an AI-powered world.

- This erosion of trust can destabilise social cohesion, especially when false videos are used to incite violence or spread misinformation.

How is India Equipped Legally and Institutionally to Tackle Deepfakes and Cyber Crimes?

- Legal Framework: India has enacted multiple laws to address online harms, including deepfakes.

- Information Technology Act, 2000 (IT Act): The IT Act addresses offenses related to identity theft (Section 66C), impersonation (Section 66D), privacy violations (Section 66E), and transmission of obscene or illegal content (Sections 67, 67A).

- It also empowers the government to issue blocking orders (Section 69A) and mandates intermediaries’ due diligence (Section 79).

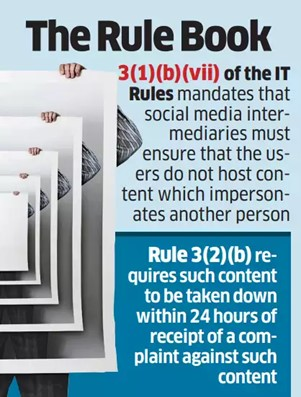

- Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 (IT Rules, 2021): These Rules, amended in 2022 and 2023, explicitly hold intermediaries (social media platforms and others) accountable for preventing the hosting or transmission of unlawful or synthetic content.

- They require swift removal of such content, the appointment of grievance officers, and transparent grievance redressal through Grievance Appellate Committees (GACs).

- Recent advisories emphasize removing misinformation, including deepfakes, and educating users on synthetic content.

- Digital Personal Data Protection Act, 2023 (DPDP Act): This Act ensures lawful processing of personal data with user consent and security safeguards.

- Bharatiya Nyaya Sanhita, 2023 (BNS): Section 353 penalises the spread of misinformation causing public mischief, while organised cybercrime involving deepfakes can be prosecuted under Section 111, enabling broader law enforcement scope.

- India’s Multi-Layered Cyber Response Ecosystem: India employs institutional, regulatory, reporting, and public awareness mechanisms to tackle cyber crimes, user grievances, and unlawful content, including deepfakes:

- GACs (Grievance Appellate Committees): Provide appellate forums to challenge decisions of intermediaries.

- Indian Cyber Crime Coordination Centre (I4C): Coordinates cyber crime actions across States and empowers agencies to issue removal notices under the IT Act read with the IT Rules, 2021.

- SAHYOG Portal (managed by I4C): Enables automated, centralised removal notices to intermediaries; all authorised agencies use it to remove unlawful content.

- National Cyber Crime Reporting Portal: Citizens can report incidents, including deepfakes, financial frauds, and content misuse, through this portal.

- CERT-In: Issues guidelines on AI-related threats, including deepfakes; issued an advisory in Nov 2024 for protection measures.

- Police: Investigate cyber crimes.

- Awareness Campaigns: MeitY organises National Cyber Security Awareness Month (NCSAM), Safer Internet Day, Swachhta Pakhwada, and Cyber Jagrookta Diwas (CJD) to educate citizens and the cyber community.

What Further Steps can India Adopt to Enhance Its Framework Against the Rising Threats of Deepfakes and Cybercrimes?

- Stringent Legal Regulations and Clear Definitions: Countries should develop precise legal frameworks that criminalise malicious deepfake use, including unauthorised impersonation and misinformation.

- India’s proposed amendments to the IT Rules emphasise mandatory labelling and traceability, similar to international norms in the EU’s AI Act and the UK’s Online Safety Act.

- Clear legal definitions of “synthetically generated information” will help in enforcement and legal accountability.

- Mandatory Content Labelling and Metadata Embedding: Enforce the use of prominent labels and metadata on all AI-generated content to assist users in identifying synthetic media.

- India's draft rules mandate visual labels covering at least 10% of the display area and audio markers audible for at least 10% of the duration, aligning with global practices like the EU’s AI Act, which requires machine-readable metadata.

- Technical measures should include watermarks, digital signatures, or embedded metadata that cannot be altered or removed.

- Establishment of Specialised Regulatory Bodies: Create dedicated institutions akin to India’s GAC (Grievance Appellate Committee) and CERT-In to oversee compliance, handle complaints, and coordinate responses globally.

- International agencies like the US’s FTC (Federal Trade Commission) and the UK’s ICO (Information Commissioner’s Office) serve as models for oversight, investigation, and enforcement.

- Technological Solutions: Invest in and promote the development of AI-based detection algorithms, blockchain verification, and content authenticity platforms, as seen in efforts by companies like Amber Video and Deeptrace.

- These tools can automatically flag or filter synthetic media, assist law enforcement, and verify content authenticity in real time.

- International Collaboration and Data Sharing: Form multilateral alliances like INTERPOL’s Cybercrime Directorate or regional cooperation frameworks to share data, threat intelligence, and best practices on deepfake mitigation.

- Harmonised standards and joint operations can prevent cross-border misuse and limit the spread of malicious content.

- Incentivise Ethical AI Development and Industry Responsibility: Encourage AI developers and platforms to adopt ethical standards, transparency policies, and compliance certifications.

- India’s draft rules require AI tools to embed watermarking and labels from the point of creation.

- International practices also recommend industry-led self-regulation, such as the Partnership on AI, promoting responsible innovation.

- Public Awareness and Digital Literacy Campaigns: Governments and civil society should run continuous awareness campaigns educating users about deepfakes, their risks, and verification techniques.

- India’s Cyber Jagrookta Diwas and Global Internet Trust campaigns exemplify successful initiatives.

- Enhancing digital literacy reduces susceptibility to misinformation and deepfake manipulation.
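The recommendation above on metadata embedding and digital signatures can be illustrated with a short sketch. It assumes a shared secret key and uses an HMAC as a stand-in for a full digital signature; the function names and the record fields are hypothetical, and nothing here is prescribed by the draft rules. Note that such a scheme makes tampering detectable rather than impossible, and a production system would more likely use public-key signatures or an established content-provenance standard.

```python
import hashlib
import hmac
import json


def build_record(content: bytes, generator: str) -> dict:
    """Build a hypothetical provenance record bound to the content by its hash."""
    return {
        "synthetic": True,
        "generator": generator,
        "sha256": hashlib.sha256(content).hexdigest(),
    }


def sign_record(record: dict, key: bytes) -> str:
    """Sign the record with HMAC-SHA256 over a canonical JSON encoding."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(content: bytes, record: dict, signature: str, key: bytes) -> bool:
    """Return True only if neither the record nor the content was altered."""
    expected = sign_record(record, key)
    # Constant-time comparison avoids leaking information through timing.
    if not hmac.compare_digest(expected, signature):
        return False
    return record.get("sha256") == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    key = b"demo-key-for-illustration-only"
    content = b"example media bytes"
    record = build_record(content, generator="example-model")
    signature = sign_record(record, key)
    print(verify(content, record, signature, key))
    # Flipping the "synthetic" flag invalidates the signature.
    print(verify(content, dict(record, synthetic=False), signature, key))
```

Binding the label to a hash of the content means that stripping or editing the record, or altering the media itself, causes verification to fail, which is the property the traceability requirement is aiming for.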

Conclusion:

As Microsoft President Brad Smith argued, “AI could be an incredible tool, but there should be guardrails to protect against abuse, misuse, or unintended consequences.” The rise of deepfakes and cybercrimes demands vigilant, multifaceted responses that align legal, technological, and societal efforts globally. Moving forward, India must strengthen international collaboration, invest in detection technologies, enhance digital literacy, and refine laws to safeguard democracy, individual rights, and digital trust.

Drishti Mains Question: “In an age where pixels can lie and voices can deceive, truth itself risks becoming negotiable.” Discuss how India’s legal and ethical frameworks can preserve informational integrity in the era of deepfakes and synthetic media.

Frequently Asked Questions (FAQs)

1. What are the mandatory labelling requirements under the IT Rules amendment?

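Labels on visual content must cover at least 10% of the display area, and labels on audio content must be audible for at least 10% of the duration; a permanent metadata identifier or watermark must also be embedded for traceability.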

2. Which factors are driving the rise of deepfakes in India?

Advanced AI tools, financial frauds, political misinformation, social media proliferation, limited digital literacy, and inadequate legal frameworks.

3. How is India legally equipped to tackle deepfakes?

Through the IT Act, 2000, IT Rules, 2021, DPDP Act, 2023, and Bharatiya Nyaya Sanhita, 2023, covering identity theft, privacy violations, and misinformation.

4. What institutional mechanisms exist to address cyber crimes and deepfakes in India?

GACs, I4C, SAHYOG Portal, CERT-In, National Cyber Crime Reporting Portal, police, and awareness campaigns like Cyber Jagrookta Diwas.

5. What further measures can India adopt to counter deepfakes and cyber crimes?

Stringent laws, mandatory labelling, AI detection tools, regulatory bodies, international collaboration, ethical AI promotion, and digital literacy campaigns.

UPSC Civil Services Examination, Previous Year Question (PYQ)

Prelims:

Q. With the present state of development, Artificial Intelligence can effectively do which of the following? (2020)

1. Bring down electricity consumption in industrial units

2. Create meaningful short stories and songs

3. Disease diagnosis

4. Text-to-Speech Conversion

5. Wireless transmission of electrical energy

Select the correct answer using the code given below:

(a) 1, 2, 3 and 5 only

(b) 1, 3 and 4 only

(c) 2, 4 and 5 only

(d) 1, 2, 3, 4 and 5

Ans: (b)

Mains:

Q. Discuss different types of cyber crimes and measures required to be taken to fight the menace. (2020)